0.14.2.6

Posted by ququasar in Uncategorized on November 25, 2022


Changelog

- Added localizable strings and tooltips for emotion names, descriptions and adjectives.

- Added localizable strings for a large number of AI routine names, as used by the thought logger.

- Added localizable strings for energy deltas, shown on the “Energy” details tab.

- Added missing localizable strings on the Customize Creatures menu page.

- Added missing localizable strings for creature behavior states.

- Added missing localizable strings for temperature reporting.

If you’ve been translating the game, you’ll find all these new strings appended to the bottom of “Species ALRE\Language\en-AU.txt”. Copy them into your own language txt file to begin translating.
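Finding which of the new keys your own file still lacks can be automated. The Python below is a minimal sketch, not part of the game or the Mod Packager: it assumes each line of a language file is a single `Key=Value` pair split at the first `=`, and the function names and example keys are hypothetical.

```python
def parse_language_file(text: str) -> dict:
    """Parse 'Key=Value' lines into a dict, splitting each line at its first '='."""
    entries = {}
    for line in text.splitlines():
        if "=" not in line:
            continue  # skip blank or malformed lines
        key, value = line.split("=", 1)
        entries[key.strip()] = value
    return entries


def missing_lines(reference_text: str, translation_text: str) -> list:
    """Return the reference 'Key=Value' lines whose keys the translation lacks, in reference order."""
    reference = parse_language_file(reference_text)
    translation = parse_language_file(translation_text)
    return [f"{key}={value}" for key, value in reference.items() if key not in translation]


english = "Emotion.Fear=Fear\nEmotion.Joy=Joy\n"  # hypothetical keys
wom = "Emotion.Fear=Grok\n"
print(missing_lines(english, wom))  # ['Emotion.Joy=Joy']
```

To use it on real files, read both with the same encoding the game writes them in (UTF-8 is an assumption here) and append the returned lines to the bottom of your translation.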

And also, thank you!

0.14.2.5

Changelog

- Fixed an egg-related crash that happened when loading a game.

0.14.2.4

Posted by ququasar in Uncategorized on November 7, 2022

Changelog

- Tentative fix for a creature teleportation bug associated with pouncing attacks.

- Main menu buttons are now localizable (they don’t look quite as good as before, but it’s not too bad).

- Fix a crash when tutorial header text is translated.

- Added language name to the localization strings.

- Fix some typos in the English localization.

0.14.2.3

Changelog

- Tentative fix for MacOS version crashing on startup.

- Fix creatures inside eggs in the nursery dying before they hatch.

- Fix imported creatures dying as soon as they are imported.

- Fix a number of localization errors introduced in 0.14.2.2

0.14.2.2

Posted by ququasar in Uncategorized on October 30, 2022

Changelog

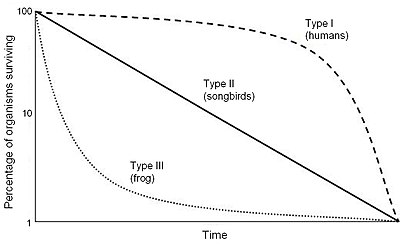

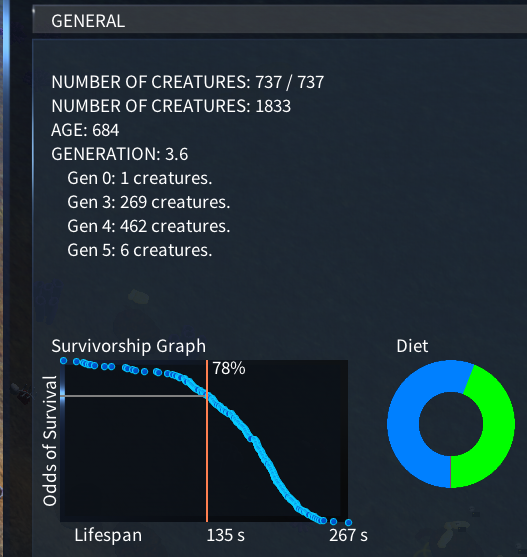

- Add a Survivorship Curve to the Species Overview screen, derived from actual lifespan data taken from the simulation.

- “A survivorship curve is a graph showing the number or proportion of individuals surviving to each age for a given species or group (e.g. males or females).” – Wikipedia

- Fix an energy exploit among creatures that cannibalize their own eggs.

- Overwrite the en-AU.txt file when Species is run, to enable text updates from my end.

- Fix a crash when the Import Creature screen is opened.
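A survivorship curve can be computed directly from recorded ages at death. The Python below is a minimal sketch, not the game's implementation: it assumes a plain list of lifespans (in whatever age unit the simulation uses) and reports, for each age step, the proportion of individuals that lived at least that long. `survivorship_curve` is a hypothetical name.

```python
def survivorship_curve(lifespans, step=1.0):
    """Return (age, proportion surviving to that age) pairs from recorded ages at death."""
    if not lifespans:
        return []
    n = len(lifespans)
    buckets = int(max(lifespans) // step)
    curve = []
    for i in range(buckets + 1):
        age = i * step  # multiply rather than accumulate, to avoid float drift
        survivors = sum(1 for lifespan in lifespans if lifespan >= age)
        curve.append((age, survivors / n))
    return curve


print(survivorship_curve([1, 2, 2, 4]))
# [(0.0, 1.0), (1.0, 1.0), (2.0, 0.75), (3.0, 0.25), (4.0, 0.25)]
```

Counting survivors separately for every age step costs time proportional to population size times the number of steps; sorting the lifespans once would be faster for very large populations.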

Where to next – 0.15.0

Posted by ququasar in Uncategorized on October 24, 2022

You’ll have to forgive the lack of images and concept art; I ran out of time.

Lately I’ve been moving towards making fatigue, sleep, temperature and exposure to the elements much more important survival needs than they are right now. Let’s put all of these mechanics under the umbrella of ‘habitat’. Eventually, habitat will be almost as significant as food as far as survival and selection pressures go: a need that creatures will have to balance against seeking food in order to survive long enough to reproduce.

Unfortunately, I find the current vegetation model wholly inadequate to support my plans for this stuff. It has failed me for the last time. So it’s time to take what I’ve learned, throw the whole system into a volcano, and build a cleaner, more elegant and far more ambitious replacement.

Here are the design goals for the new system, in rough order of priority:

- The trees need to be three-dimensional structures.

- Multiple 3D interaction points for fruit, foliage, shelter, perches and nests.

- The tree types need to serve a purpose in the ecosystem.

- Shelter trees should not provide food and food trees should not provide shelter. This will allow me to ensure the two do not generally grow in the same location.

- The trees need to be performant.

- They’re not the centerpiece of the simulation: their role doth not merit that they drink deeply from the fountain of FPS.

- The trees should evolve.

- Evolving trees is probably the second most requested feature, after flight. It was always planned.

So, let’s discuss!

Trees need to be 3D structures

Currently, trees are effectively a single coordinate in the world: an interaction point that creatures can go to for food and/or shelter. This creates a notable discrepancy with the tree models that represent them: the models can be quite large and have multiple points of interest on them, such as fruit, foliage and branches, that creatures can’t really interact with.

The thing I find most bothersome about this is how it affects fruit. A creature can interact with the trunk of a tree to eat however many fruit are clustered around its base, and they all disappear at once when consumed. This is silly, and having so many fruit objects associated with a single food source adds a ton of clutter and makes the game visually difficult to read.

Add to this the fact that the fruit are, quite frankly, ugly. I made them brightly coloured thinking it would make it easy to see when a plant had fruit available, but instead it just added even more busyness to the game.

So, 3D trees. Large trees in particular will be the center of a cloud of points representing food sources, shelter, perches and nesting sites. The last two won’t be used immediately (and may actually be one and the same), but they will lay the groundwork for future features.

With each fruit being individually edible and trees being rarer and larger, I’m hoping the game will appear a lot cleaner.
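One way to picture a tree as a cloud of points is to give each point a kind and a 3D position, and have eating consume only the single fruit a creature reaches rather than everything at the trunk. This is a minimal Python sketch under that assumption; `PointKind`, `InteractionPoint` and `Tree` are hypothetical names, not the game's classes.

```python
import math
from dataclasses import dataclass, field
from enum import Enum, auto


class PointKind(Enum):
    FRUIT = auto()
    FOLIAGE = auto()
    SHELTER = auto()
    PERCH = auto()
    NEST = auto()


@dataclass
class InteractionPoint:
    kind: PointKind
    position: tuple  # (x, y, z) in world units
    available: bool = True


@dataclass
class Tree:
    points: list = field(default_factory=list)

    def nearest(self, kind, from_pos):
        """Nearest still-available point of the given kind, or None."""
        candidates = [p for p in self.points if p.kind is kind and p.available]
        if not candidates:
            return None
        return min(candidates, key=lambda p: math.dist(p.position, from_pos))

    def eat_fruit(self, from_pos):
        """Consume only the single nearest fruit, leaving the rest on the tree."""
        fruit = self.nearest(PointKind.FRUIT, from_pos)
        if fruit is not None:
            fruit.available = False
        return fruit


tree = Tree([
    InteractionPoint(PointKind.FRUIT, (0.0, 3.0, 0.0)),
    InteractionPoint(PointKind.FRUIT, (2.0, 4.0, 0.0)),
    InteractionPoint(PointKind.SHELTER, (0.0, 0.0, 0.0)),
])
eaten = tree.eat_fruit((0.0, 0.0, 0.0))
print(eaten.position)  # (0.0, 3.0, 0.0)
```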

Foliage is trickier. Unlike fruit, which can be turned off when far away, foliage is vital to the tree’s appearance and needs to be visible from a distance. I can think of a few approaches, but I’ll need to experiment.

Each type of tree needs a purpose

Generally speaking, trees fulfil two vital needs in the simulation: food and shelter.

A creature that gets both of these from the same location is boring: its lifecycle consists of camping out around a tree and laying an egg when it reaches adulthood. There are ways to make that interesting, but it’s the exception rather than the rule.

So, I want to ensure that food and shelter are generally not available from the same tree. I’ll do this by categorizing them:

- Shelter trees will prioritize size, and will generate hollows, perches and a wide canopy to provide lots of shade. This is where creatures will rest. In a rainforest, these trees would make up the emergent layer.

- Symbiotic trees provide food, prioritizing the production of fruit under the assumption that creatures eating the fruit will also carry their seeds. These trees will be medium-sized and provide a canopy in fertile areas.

- Predated trees also provide food in the form of foliage, but unlike fruit trees they have no interest in feeding creatures and will trend towards defense and inefficiency. Pure herbivores will get the most out of these.

As a general rule, all trees will have more energy in their foliage than in their fruit, but foliage, which the tree would prefer not be eaten, should be defended, whether by inefficiency, height, or actual defenses, while fruit is easily accessible but less common.
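The food/shelter split can be written down as data and checked. This Python sketch is only an illustration of the rule described above, assuming each category offers at most one food type plus a yes/no for shelter; `TreeRole`, `RoleTraits` and the helper functions are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class TreeRole(Enum):
    SHELTER = "shelter"
    SYMBIOTIC = "symbiotic"
    PREDATED = "predated"


@dataclass(frozen=True)
class RoleTraits:
    food: Optional[str]  # what creatures can eat here, if anything
    shelter: bool        # whether creatures can rest here


# Hypothetical encoding of the three categories.
ROLE_TRAITS = {
    TreeRole.SHELTER: RoleTraits(food=None, shelter=True),
    TreeRole.SYMBIOTIC: RoleTraits(food="fruit", shelter=False),
    TreeRole.PREDATED: RoleTraits(food="foliage", shelter=False),
}


def offers_food_and_shelter(traits: RoleTraits) -> bool:
    """True if a single tree would satisfy both needs (the case the design avoids)."""
    return traits.food is not None and traits.shelter


def roles_offering(need: str) -> list:
    """Which roles a creature has to seek out for 'food' or 'shelter'."""
    if need == "food":
        return [role for role, t in ROLE_TRAITS.items() if t.food is not None]
    if need == "shelter":
        return [role for role, t in ROLE_TRAITS.items() if t.shelter]
    raise ValueError(f"unknown need: {need}")
```

Because no role satisfies both needs, a creature's day necessarily involves travelling between tree types, which is the behaviour the categories are meant to produce.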

Performance is still a thing

Hoo boy is performance ever a thing. Non-deterministic simulations are the worst.

Currently, trees use a static instancing technique with no culling. This means all the trees are rendered all the time, even the ones off-screen. This might sound inefficient, but it doesn’t affect FPS under most conditions because Species runs primarily on the CPU, leaving the GPU with cycles to spare.

Static Instancing has a flaw, though: it’s Static. It works best when we send the trees to the GPU once and don’t send them again, because sending data to the GPU is slow. Right now we get around this by sending only a small chunk of data each frame, which means each tree is only updated once every few seconds.

I’ll be exploring various ways to update things better: there’s a fork of Monogame that supports compute shaders; I need to check whether the current version of Monogame supports vertex texture fetch (“Checked it. It doesn’t”, interjects future me); and I want to experiment with a much smaller third vertex buffer that sends the dynamic info. Any of those could potentially open doors.

Generally speaking I expect to have to make compromises in order to satisfy performance concerns, but I’ll push for better and take what I can get.
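The small-chunk-per-frame workaround amounts to round-robin scheduling, and the refresh interval follows from simple arithmetic. A minimal Python sketch, assuming a fixed number of instances re-sent per frame; `ChunkedUpdater`, `refresh_interval_seconds` and the example figures (20,000 trees, 100 per frame, 60 FPS) are hypothetical, not measurements from the game.

```python
import math


class ChunkedUpdater:
    """Round-robin scheduler: re-send a fixed-size slice of instances each frame."""

    def __init__(self, count: int, chunk_size: int):
        if count <= 0 or chunk_size <= 0:
            raise ValueError("count and chunk_size must be positive")
        self.count = count
        self.chunk_size = min(chunk_size, count)
        self.cursor = 0

    def next_chunk(self) -> list:
        """Indices of the instances whose data should be uploaded this frame."""
        indices = [(self.cursor + i) % self.count for i in range(self.chunk_size)]
        self.cursor = (self.cursor + self.chunk_size) % self.count
        return indices


def refresh_interval_seconds(count: int, chunk_size: int, fps: float) -> float:
    """Roughly how long an instance waits between updates, rounded up to whole frames."""
    frames_per_cycle = math.ceil(count / chunk_size)
    return frames_per_cycle / fps


print(refresh_interval_seconds(20_000, 100, 60))  # 200 frames, about 3.3 seconds
```

The trade-off is explicit: a larger chunk shortens the interval but puts more upload traffic on every frame.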

The trees have to evolve

Here we go. The big one. Evolving trees.

I will need a simple and versatile model for tree structure that allows for a lot of variety without being overly expensive. What I’ve settled on is a simple three-part system: trees will have a stem, a crown, and a foliage layer, each with their own genes for scale and colour.
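A three-part genome of this shape fits in a few small immutable records. The Python below is a sketch under assumptions not stated in the post: scale is a positive multiplier, colour is an RGB triple in [0, 1], and mutation adds Gaussian noise. `PartGenes`, `TreeGenome`, `mutate` and the mutation rate are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PartGenes:
    scale: float   # relative size of this part
    colour: tuple  # (r, g, b), each channel in [0, 1]


@dataclass(frozen=True)
class TreeGenome:
    stem: PartGenes
    crown: PartGenes
    foliage: PartGenes


def _clamp(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))


def mutate_part(part: PartGenes, rng: random.Random, rate: float = 0.1) -> PartGenes:
    """Perturb scale multiplicatively and each colour channel additively."""
    scale = max(0.05, part.scale * (1.0 + rng.gauss(0.0, rate)))  # never shrink to nothing
    colour = tuple(_clamp(c + rng.gauss(0.0, rate)) for c in part.colour)
    return PartGenes(scale, colour)


def mutate(genome: TreeGenome, rng: random.Random, rate: float = 0.1) -> TreeGenome:
    """Return a mutated copy; the original genome is left untouched."""
    return TreeGenome(
        stem=mutate_part(genome.stem, rng, rate),
        crown=mutate_part(genome.crown, rng, rate),
        foliage=mutate_part(genome.foliage, rng, rate),
    )
```

Keeping the genome frozen means a mutation always produces a new candidate, which suits a generate-and-rank approach where many variants of one parent are compared side by side.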

I don’t plan to do tree evolution in quite the same way as it’s done with creatures, with every individual being unique and responsible for ensuring its own reproduction. Instead, I plan to implement a simpler and more conventional genetic algorithm: generate a set of mutations, rank them according to a fitness function, and use the fittest mutations for the next generation.

This is for a number of reasons, but the most important from a design perspective is something I’ve learned from all my time working on Species: emergent evolution is ridiculously hard to control. Control is something I want in the trees, because they are a major pillar of the simulation. They need to provide food and habitat for the creatures, they need to grow in appropriate locations (lookin’ at you, land coral), they need to die off and be replaced as the biome drifts and changes, and so on.
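The generate, rank and select loop can be written generically. This is a minimal Python sketch, not the planned implementation: it keeps the parents in the candidate pool (elitism) so the best fitness never goes backwards, and the example genome (a single trunk height) and its target value are hypothetical stand-ins for a designer-chosen fitness function.

```python
import random
from typing import Callable, List, TypeVar

G = TypeVar("G")


def next_generation(
    parents: List[G],
    mutate: Callable[[G, random.Random], G],
    fitness: Callable[[G], float],
    offspring_per_parent: int,
    survivors: int,
    rng: random.Random,
) -> List[G]:
    """Generate mutations, rank every candidate by fitness, keep the best few."""
    candidates = list(parents)  # parents compete too, so the best never regresses
    for parent in parents:
        candidates.extend(mutate(parent, rng) for _ in range(offspring_per_parent))
    candidates.sort(key=fitness, reverse=True)
    return candidates[:survivors]


# Hypothetical example: steer a one-gene "trunk height" towards 10 units.
rng = random.Random(0)
population = [1.0]
for _ in range(50):
    population = next_generation(
        population,
        mutate=lambda h, r: h + r.gauss(0.0, 0.5),
        fitness=lambda h: -abs(h - 10.0),
        offspring_per_parent=5,
        survivors=4,
        rng=rng,
    )
```

The control comes from the fitness function: changing the target (for example per biome) redirects the whole population, which is much harder to do when selection is emergent.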

Generally speaking, I want to aim for a realistic or semi-realistic art style for the plants. They may be made of parts that don’t normally go together, but the parts themselves will be lifted directly from terrestrial vegetation. Nothing too fantastical or alien: no giant mushrooms or hydrogen-filled balloon trees, but maybe the occasional thorny apple palm or flowering bananatato. Boab-dandelions. Rafflesia pines that grow stubby carrots instead of pinecones.

… suddenly the giant mushrooms aren’t looking so silly.

Bonus: other ideas!

I do have a couple of additional ideas for trees that may not survive contact with the codebase or may end up being postponed to a later date. They include…

- Fallen trees. It would be nice to have large trees fall over and become part of the environment at the end of their lives, growing moss and serving as an inhabitable ‘hollow log’ shelter until they decay.

- Meat trees. No, not like that. I mean things like termite mounds and grub-infested trees: sources of protein that carnivorous creatures with the right body parts can access.

- Coral trees. The simple solution would be to treat coral clusters as a type of tree and give them their own set of crowns and stems, but ideally underwater plants should have their own set of rules, allowing kelp to stretch to the surface and coral to grow together into large, habitable reefs.

I’ve got a lot more to say about the specifics of design and implementation, but I expect this one to take me a while and, full disclaimer, my day job has been throwing me a few curve balls lately. With that said, my motivation for Species remains high. Let’s see how this goes!

Cheers,

Quasar

By the way, the future feature unlocked by nesting sites is nests (obviously) and the feature unlocked by perches is flight. Flying requires somewhere to fly to.

Tutorial – How to Translate the Game

Posted by ququasar in Uncategorized on October 10, 2022

This is how you can translate Species into a language other than English, for those so inclined.

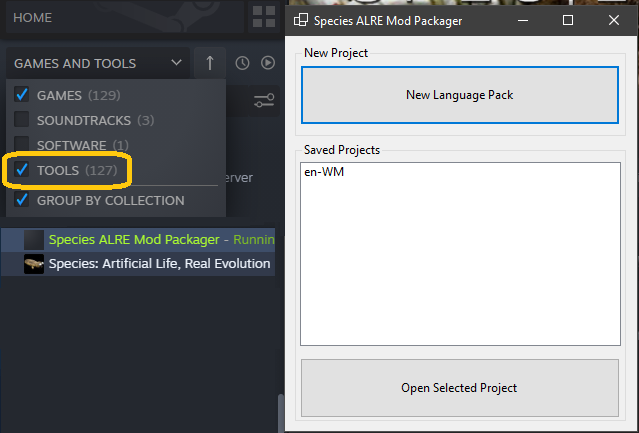

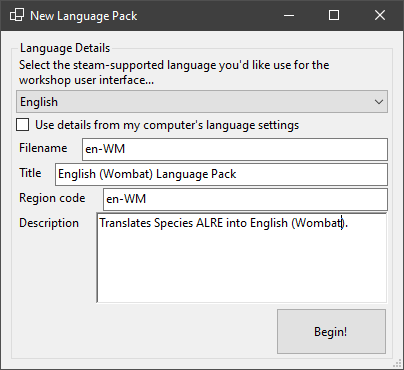

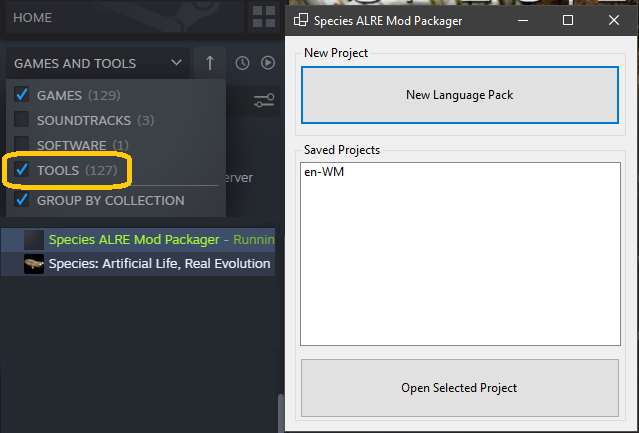

Step 1. Access the Species ALRE Mod Packager in Steam by turning on the “Tools” filter. Open up the tool, then click New Language Pack.

Note: The Mod Packager must be opened from Steam in order to publish anything to the Steam Workshop. Don’t try to run it from the exe.

Step 2. If the tool can’t work them out from your regional settings, uncheck “Use details from my computer’s language settings” and enter some basic details about your language. Then click Begin!

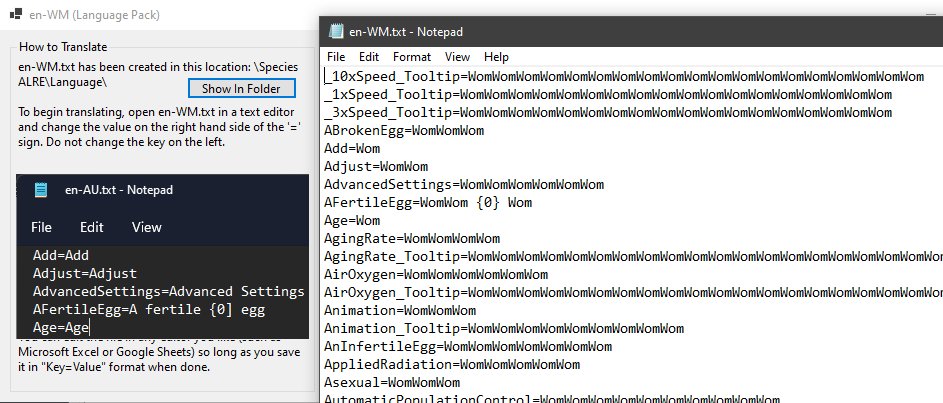

Step 3. At this point, the translation project has already been created and saved as a folder under \Species ALRE\Mod Packager\Projects\. You can close and re-open the tool without losing any work.

A text file has been created in the \Species ALRE\Language\ subfolder based on the region code you specified. You can click “Show In Folder” to go to it, and you can open it in any text editor (notepad, wordpad, notepad++) to begin translating.

Alternatively, you can open the file in Excel or Google Sheets if you prefer. Just remember to save it in the same “Key=Value” format you found it in.
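If you work in a spreadsheet with keys in the first column and text in the second, the sheet has to be turned back into `Key=Value` lines before the game can read it. A minimal Python sketch, assuming the sheet was exported as a two-column UTF-8 CSV (Excel offers a "CSV UTF-8" save option); `csv_to_key_value` is a hypothetical helper, not part of the Mod Packager.

```python
import csv
import io


def csv_to_key_value(csv_text: str) -> str:
    """Convert a two-column (key, value) CSV export back into Key=Value lines."""
    lines = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) < 2 or not row[0].strip():
            continue  # skip blank rows and rows with no value column
        key, value = row[0].strip(), row[1]
        if "\n" in value:
            # The Key=Value format is one entry per line, so a cell with a line break cannot round-trip.
            raise ValueError(f"value for {key!r} spans multiple lines")
        lines.append(f"{key}={value}")
    return "\n".join(lines)


print(csv_to_key_value('Menu.Start,Start\nMenu.Quit,"Quit, now"\n'))
```

The `csv` module handles quoted cells, so text containing commas survives the trip back to `Key=Value`.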

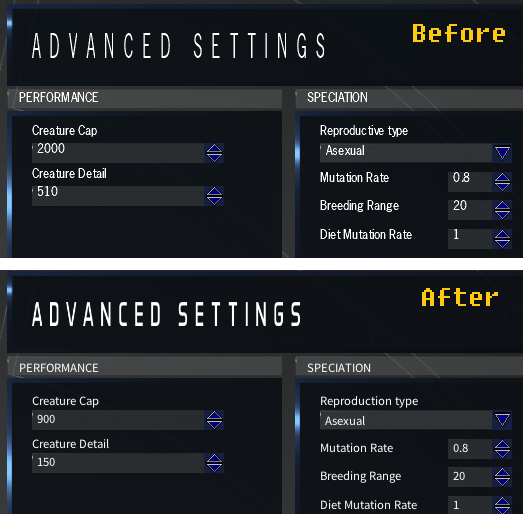

Step 4. You can save the text file and open Species: Artificial Life, Real Evolution at any time to see how the translation looks in-game.

Note 1: Do not subscribe to your own language pack if you have already published it to the Steam Workshop. If you do, any changes you make to this file will be reset every time you start the game.

Note 2: I expect there will be many translation keys that I am still missing: for example, I realized while ~~spending far more time than was necessary setting up this joke~~ spending exactly the right amount of time translating puny human English into the glorious language of Wom that I’d missed the “Ocean” dropdown. If you let me know when you find missing translation strings, I can add them to the default file and you can copy them from there into your version.

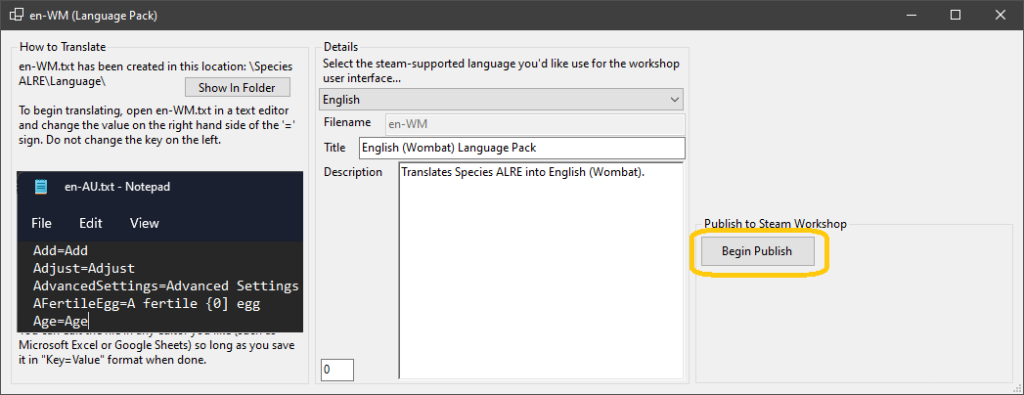

Step 5. Once the translation file is finished, it’s time to upload it to the steam workshop. If you’ve closed the Mod Packager, re-open it and use “Open Selected Project” to open the translation project. Now, click “Begin Publish” to do exactly that.

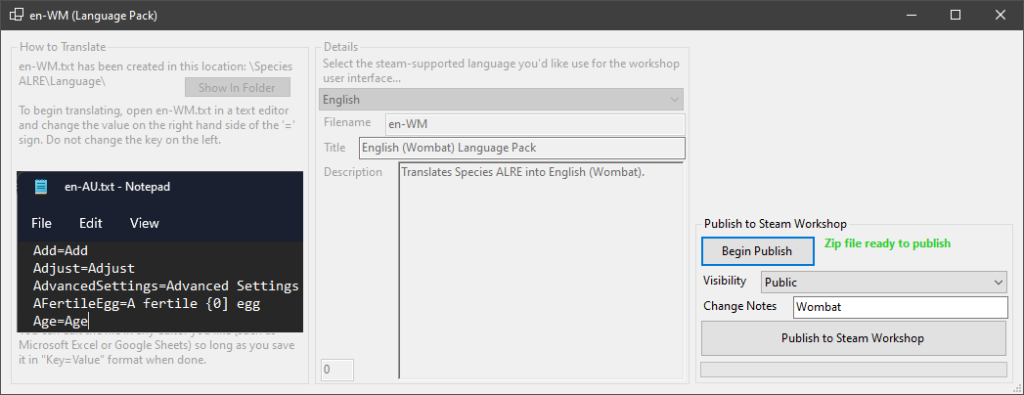

The tool has now created a Zip file under \Species ALRE\Mod Packager\Projects\Publish\[MyProject]\, ready to be sent to the steam workshop. Fill in the two last minute details: Workshop Visibility (Private, Public, Friends Only or Unlisted) and Change Notes, if you are updating a project already on the workshop, and click “Publish to Steam Workshop”.

Step 6. Your language pack is on the Steam Workshop! But you’ll see it still lacks a preview image, so set up something appropriate using the “Add/edit images & videos” link in the Owner Controls in the bottom right. I believe Steam enforces a <1MB filesize limit on preview images, so I recommend using *.jpg format for yours.

Finally, if you didn’t choose “Visibility: Public” when Publishing, you can do that now with “Change Visibility”, also in the owner controls in the bottom right. This will make your language pack available for anyone to download.

Happy translating,

Quasar

0.14.2.0 Released to Experimental

Posted by ququasar in Uncategorized on October 10, 2022

0.14.2.0 is now live on the experimental branch. This update does not include any gameplay changes, but instead includes an external tool:

The Species ALRE Mod Packager

This tool is bare-bones for now, but it will ultimately be expanded to create, package and upload any type of mod to the Steam Workshop. For now the only option is Language Pack: Species ALRE can be translated into any language, and the pack shared with others on the Steam Workshop.

Several people have expressed an interest in translating the game into their own languages, so I look forward to seeing what comes of this.

You can find a tutorial on how to create language packs here.

Font Change

As a result of needing to support additional characters for new languages, the game’s text renderer had to be replaced, and I took the opportunity to select improved fonts for the game going forward. The difference is subtle, but there.

New Music

Finally, the game includes three new background music tracks:

- Speciation

- Abiogenesis, and…

- Punctuated Equilibrium

All three were composed by discord user Poliostasis specifically for the game.

Cheers,

Quasar

Up Next – Localization

Posted by ququasar in Uncategorized on October 3, 2022

It has come to my attention that humans use words, with their fingers and also their human face holes, and further, that not all humans use the same vocabulary of words. This seems wildly inefficient, but nevertheless must be accounted for.

So, after deciding that a global brainwashing effort would be more trouble than it’s worth, I’m now working on localizing Species, an effort which I will release as 0.14.2.

I want it to be possible for humans who aren’t me to translate the game into other languages, without needing to go through me to get it compiled. This will remove me as a potential bottleneck and allow community translators to see the results of their translation just by booting up the game.

This will be a four-stage process:

1. Centralize the strings

All hardcoded text needs to be moved to a central class. This will be an ongoing effort: there is a *lot* of text in the game, some of which is utilized for more than just display. And things like the thoughtlogger and a lot of enum objects use the actual in-code object names, which are of course written in my native language of ‘Straylian.

But I don’t have to localize everything immediately: if done properly, the system will just fall back to English whenever a localized string is not available. It might not be pretty, but the game should be quite playable even if I only translate the most obvious strings.

This is ongoing.
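The fallback rule is a short lookup chain. A minimal Python sketch, assuming strings are held in plain key-to-text dictionaries; `Localizer` is a hypothetical name, and treating an empty translation as missing is an added assumption.

```python
from typing import Optional


class Localizer:
    """Look up display text: localized first, then English, then the raw key."""

    def __init__(self, english: dict, localized: Optional[dict] = None):
        self.english = english
        self.localized = localized or {}

    def get(self, key: str) -> str:
        text = self.localized.get(key)
        if text:  # missing and empty translations both count as untranslated
            return text
        return self.english.get(key, key)  # last resort: show the key so gaps are visible


loc = Localizer({"Menu.Start": "Start", "Menu.Quit": "Quit"}, {"Menu.Start": "Begin"})
print(loc.get("Menu.Quit"))  # Quit
```

Showing the raw key as a final fallback makes untranslated or missing strings easy to spot in-game instead of rendering as blank text.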

2. Externalize the strings

Once we have all the text in one place, we can put it into an external text file. I’ve chosen JSON for this, mainly because the formatting is a bit less bulky than XML.

One day I will settle on one technology for all my data files, but today is not that day.

Anyway, this stage is completed, as of today.
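Externalizing the strings boils down to serializing a key-to-text mapping and validating it on load. A minimal Python sketch, assuming a flat JSON object of string keys to string values (the post does not describe the actual schema); `to_json` and `from_json` are hypothetical names.

```python
import json


def to_json(strings: dict) -> str:
    """Serialize a flat key-to-text mapping; non-ASCII text is written literally, not escaped."""
    return json.dumps(strings, ensure_ascii=False, indent=2, sort_keys=True)


def from_json(text: str) -> dict:
    """Parse a strings file, rejecting anything that is not a flat object of strings."""
    data = json.loads(text)
    if not isinstance(data, dict) or not all(
        isinstance(k, str) and isinstance(v, str) for k, v in data.items()
    ):
        raise ValueError("expected a flat JSON object mapping string keys to string values")
    return data


text = to_json({"Menu.Start": "Démarrer", "Menu.Quit": "Quitter"})
print(text)
```

Writing non-ASCII characters literally (`ensure_ascii=False`) keeps the file readable for translators working in other scripts; the file then needs to be saved as UTF-8.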

3. Desprite the font

Monogame uses Spritefonts to draw text: short version, a selection of characters from the font are drawn to a texture atlas, which is then drawn on the screen to display text to the user. Advantage: very efficient way to draw text. Disadvantage: can’t draw characters you haven’t pre-rendered to the atlas. So it won’t support the characters of [insert language here].

Monogame does include LocalizedSpriteFont, which renders the exact characters you need for a particular translation to the texture atlas… *if* you have the translation in a resource file at compile time. Which I absolutely won’t. I don’t want translators to have to get me to recompile and re-upload the game every time they need to tweak the translation library.
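The limitation is easy to demonstrate: any character a translation uses that was not pre-rendered into the atlas simply has no glyph. A minimal Python sketch, assuming an atlas built from one contiguous code-point range (32 to 126, printable ASCII, is a typical sprite font range); the helper names are hypothetical.

```python
def ascii_atlas(first: int = 32, last: int = 126) -> set:
    """Characters a sprite font pre-rendered for one contiguous code-point range would contain."""
    return {chr(code) for code in range(first, last + 1)}


def unsupported_characters(strings: dict, atlas: set) -> list:
    """Characters a translation uses that the atlas cannot draw (line breaks ignored)."""
    missing = set()
    for text in strings.values():
        missing.update(ch for ch in text if ch not in atlas and ch not in "\r\n")
    return sorted(missing)


print(unsupported_characters({"Menu.Start": "Start", "Menu.Quit": "Выход"}, ascii_atlas()))
```

Computing this set is the part a compile-time approach needs the translation for; a library that rasterizes glyphs on demand avoids needing to know it in advance.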

So, I’m looking for a third-party library which does it all on the fly. I’ve found two: SixLabors.Fonts and FontStashSharp. Either way, I’ve gotta rip out a thoroughly integrated text drawing system and replace it with something else.

This hasn’t been started.

4. Steam the Workshop

I need to work out how Steam Workshop functions.

Well, I mean, technically I don’t. People could simply copy a translation file into the “Language” subfolder, it’ll work all the same. But learning how Steam Workshop works will allow me to make distributing language packs a lot easier.

More than that, though, turning on the Workshop will be the first step towards official mod support. Monogame isn’t exactly a mod-friendly framework, but I fully intend to expose more and more of the game as we move forward.

This I don’t even know how to do. I will have to read the user manual.

Geez, this is a lot of work just to support humanity’s persistent and entirely illogical refusal to consolidate basic communication protocols. We really need to develop one universal standard that covers everyone’s use cases.

Cheers,

Quasar, a perfectly normal human.

0.14.1.14

Posted by ququasar in Uncategorized on October 2, 2022

0.14.1.14 Changelog

- Fix a memory leak introduced in 0.14.1.12 that was causing an escalating series of freezes, ultimately leading to the game becoming non-responsive.

0.14.1.13 Changelog

- More consistent fossil generation.

- Fix a crash when loading a world.

- Fix a different crash when loading a world.

0.14.1.12

Posted by ququasar in Uncategorized on September 28, 2022

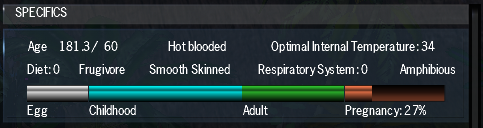

- Add a lifecycle progress bar to Creature Details – Overview page.

- Fix clade diagram problem that was causing long-term saves to become corrupt and fail to load.

- Clade diagram will reset on load when this happens.

- Keybindings are disabled when typing into the Save/Export Creature filename.

- Holding keys down (e.g. backspace) will work correctly when typing into the Save/Export Creature filename.

- Substantial refactoring behind the scenes to make memory-pooled objects (creatures, corpses, eggs & trees) easier and simpler to work with. Hopefully, this won’t cause any noticeable changes.
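Memory pooling usually means pre-allocating objects and recycling them instead of creating new ones, with a reset step so a recycled object carries no state from its previous life. A minimal, generic Python sketch of the pattern; the game's own pooling code is not shown here, so `Pool`, `acquire`, `release` and the `Egg` example are hypothetical. In C# the usual motivation is avoiding garbage-collector pauses, which matters less in Python.

```python
class Pool:
    """Pre-allocate objects and recycle them instead of allocating new ones."""

    def __init__(self, factory, reset, capacity: int):
        self._factory = factory  # creates a fresh object
        self._reset = reset      # wipes state before an object is reused
        self._free = [factory() for _ in range(capacity)]
        self.in_use = 0

    def acquire(self):
        """Take a free object, growing the pool only if it is exhausted."""
        obj = self._free.pop() if self._free else self._factory()
        self.in_use += 1
        return obj

    def release(self, obj) -> None:
        """Return an object to the pool; forgetting this is how pooled objects leak."""
        self._reset(obj)
        self._free.append(obj)
        self.in_use -= 1


class Egg:
    def __init__(self):
        self.energy = 0.0


def reset_egg(egg: Egg) -> None:
    egg.energy = 0.0
```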

0.14.1.11

Posted by ququasar in Uncategorized on September 18, 2022

0.14.1.11 Changelog

- Change diet pie chart colours

- Add consumption percentage figures

- Fix crash when putting creatures in nursery

- Fix creatures not being lifted when being moved

- Fix Nursery UI disappearing when opening it by clicking on the tower
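Consumption percentages for a diet pie chart are each food type's share of the total. A minimal Python sketch, assuming consumption is tracked as a running total per food type (energy is a plausible unit, but that is an assumption); `consumption_percentages` is a hypothetical name.

```python
def consumption_percentages(consumed: dict) -> dict:
    """Turn per-food-type consumption totals into percentages for a pie chart."""
    total = sum(consumed.values())
    if total <= 0:
        return {food: 0.0 for food in consumed}  # nothing eaten yet: avoid dividing by zero
    return {food: 100.0 * amount / total for food, amount in consumed.items()}


print(consumption_percentages({"fruit": 30.0, "foliage": 50.0, "meat": 20.0}))
# {'fruit': 30.0, 'foliage': 50.0, 'meat': 20.0}
```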

0.14.1.11 Changelog

- Add dietary pie chart to the clade diagram and sat map views.

- Fix teleporting pounce bug

- Fix liedownanddie micronap loop

- Fix a multithreading-related crash

- Fix creatures able to target locations beyond fences when running

- Fix creature thumbnail stretching in export creature screen

- Fix rovers being able to target creatures in the nursery